Are pixel scale and resolution the same thing in astrophotography? Can we increase resolution just by making pixels smaller? In some cases we can, but quite often we cannot.

Two setups

Within the scope of this article, I assume that the sensors I describe have the same noise characteristics and the same sensitivity (quantum efficiency). I also do not deal with optical aberrations here. To start, let’s consider two setups with the same pixel scale. In both setups we have a camera with 1 Mpx resolution (1000x1000 px):

- the first: a sensor with 10×10 um pixels on a telescope with 100 mm aperture and 1000 mm focal length (100/1000)

- the second: a sensor with 5×5 um pixels on a telescope with 50 mm aperture and 500 mm focal length (50/500)

Both setups have the same focal ratio, and both provide images with the same 1 Mpx resolution and the same pixel scale of about 2″/px. Do they also provide images of the same quality? One could say yes. But these setups differ in one fundamental parameter: aperture. In astronomy aperture rules, which is why telescopes are first of all large, not fast. A 100 mm aperture telescope collects four times more photons than a 50 mm aperture telescope, because its light-collecting surface is four times larger. And since the pixel scale is the same in both setups, each pixel in the first setup receives four times more photons, so the image it provides will be of much better quality: its signal to noise ratio (SNR) will be better.
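The factor of four can be checked with a few lines of Python. This is only a sketch: the function names are mine, the aperture is assumed to be circular and unobstructed, and the SNR figure assumes that photon shot noise dominates (so SNR grows with the square root of the collected photons), which the article does not state explicitly.

```python
import math

def aperture_area_mm2(aperture_mm):
    """Area of a circular, unobstructed aperture in square millimetres."""
    return math.pi * (aperture_mm / 2.0) ** 2

def light_gathering_ratio(aperture_a_mm, aperture_b_mm):
    """How many times more light aperture A collects than aperture B."""
    return aperture_area_mm2(aperture_a_mm) / aperture_area_mm2(aperture_b_mm)

ratio = light_gathering_ratio(100.0, 50.0)
# Assumption: shot-noise-limited imaging, so SNR scales with sqrt(photons).
snr_gain = math.sqrt(ratio)

print(f"100 mm vs 50 mm aperture: {ratio:.1f}x more photons per pixel")
print(f"Shot-noise-limited SNR gain: {snr_gain:.1f}x")
```

Because both setups share the same pixel scale, the per-pixel photon ratio equals the aperture area ratio.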

Aperture effect

A few thoughts come from this example:

- the larger aperture setup will give better quality images (in terms of SNR)

- when you attach a 10 um pixel camera to a 100/1000 telescope you will get the same result as with a 5 um pixel camera on a 100/500 telescope. And this result will be much better than for a 5 um pixel camera on a 50/500 telescope

- aperture is the most important factor, and focal ratio is a secondary value. In real life (especially among amateurs) people tend to use fast instruments for a few reasons. Fast instruments are smaller and lighter, so they are handier and also require a smaller mount. But more important is the fact that with fast instruments you can use smaller sensors to achieve a larger field of view, and smaller sensors are much cheaper. Fast instruments have their own drawbacks and are expensive, but this is out of the scope of this entry.

Focal reducer effect

But, but, doesn’t “when you attach a 10 um pixel camera to a 100/1000 telescope you will get the same result as with a 5 um pixel camera on a 100/500 telescope” sound like a focal reducer? Sure it does. A 10 um pixel camera will give exactly the same result as a 5 um pixel camera used with a 0.5x focal reducer.

Binning effect

But, but, doesn’t “when you attach a 10 um pixel camera to a 100/1000 telescope you will get the same result as with a 5 um pixel camera on a 100/500 telescope” also sound like binning? Again, yes. Binning is just an increase of pixel size. The signal collected by adjacent pixels is transferred to one output register and then read from the camera. A 5 um pixel camera attached to a 100/500 telescope will give the same quality image as the same camera working in bin x2 mode attached to a 100/1000 telescope. The SNR will be the same, but the field of view will of course be smaller, because the same sensor now sits behind twice the focal length, and in bin x2 mode the effective resolution drops to 500x500 px.
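To make the idea of "adjacent pixels merged into one" concrete, here is a minimal pure-Python sketch that sums each 2x2 block of a small frame. It is a software illustration only: the function name `bin2x2` and the sample frame are made up, and it shows the geometry of the summation, not the noise behaviour of on-chip binning.

```python
def bin2x2(image):
    """Sum each 2x2 block of a 2D list of pixel values.

    Assumes the image has an even number of rows and columns.
    """
    rows, cols = len(image), len(image[0])
    return [
        [
            image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]

# A made-up 4x4 frame; after binning it becomes 2x2.
frame = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
binned = bin2x2(frame)
print(binned)  # [[14, 22], [46, 54]]
```

Each output pixel carries the summed signal of four input pixels, and the pixel count per axis is halved, just as a 1000x1000 px sensor becomes 500x500 px in bin x2 mode.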

Image resolution

We can calculate the pixel scale for a given setup with the formula below:

scale [″/px] = 206.3 * pixel size [um] / focal length [mm]
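As a quick check of the formula, the following Python sketch evaluates it for the two setups described at the start of the article. The function name is my own choice.

```python
def pixel_scale_arcsec(pixel_size_um, focal_length_mm):
    """Pixel scale in arc seconds per pixel.

    206.3 converts the ratio of micrometres to millimetres into arc seconds.
    """
    return 206.3 * pixel_size_um / focal_length_mm

scale_first = pixel_scale_arcsec(10.0, 1000.0)   # 10 um pixels, 100/1000 telescope
scale_second = pixel_scale_arcsec(5.0, 500.0)    # 5 um pixels, 50/500 telescope

print(f"First setup:  {scale_first:.2f} arcsec/px")
print(f"Second setup: {scale_second:.2f} arcsec/px")
```

Both setups come out at roughly 2″/px, which is the starting assumption of the comparison.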

When we consider an imaging setup it is good to find out which factors limit our capabilities and to fight them. For low pixel resolution setups (3-4 arcsec/px and more) the optics resolution limit is of low importance. In these cases the pixel scale itself is the limiting factor. At very low pixel resolution (6 arcsec/px and more) you may observe that light from some stars lands in only one pixel. It happens for example in images made with 50-150 mm telephoto lenses: most of the stars have the same size and look like sand grains. But these are purely aesthetic issues, and I will show later how we can fight them.

When the pixel scale becomes finer (3 arcsec/px and less), more and more limiting factors start to show up:

- atmospheric seeing. Usually for non-premium locations it is in the range 2-3″

- mount tracking. It depends on mount capabilities. A well adjusted low budget mount like the HEQ5 or EQ6 is capable of guided tracking with an RMS error of less than 1″

- optics resolution. A few different equations describe theoretical optics resolution. The most common is the Rayleigh limit, where for yellow 550 nm light the resolution expressed in arc seconds is given by 138 / aperture [mm]. It is often called the diffraction limit. This is of course a theoretical limit, and in real optics it will always be worse

- at the end of this train we have a camera that needs to sample this image into digital form
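The 138 / aperture rule quoted in the list above follows from the Rayleigh criterion, 1.22 × wavelength / aperture, converted from radians to arc seconds. The sketch below reproduces it in Python; the function name and the default wavelength argument are my own, and it gives the theoretical limit only, not the performance of real optics.

```python
import math

def rayleigh_limit_arcsec(aperture_mm, wavelength_nm=550.0):
    """Theoretical Rayleigh resolution limit in arc seconds."""
    wavelength_mm = wavelength_nm * 1e-6           # nanometres to millimetres
    angle_rad = 1.22 * wavelength_mm / aperture_mm
    return math.degrees(angle_rad) * 3600.0        # radians to arc seconds

for aperture in (65.0, 100.0, 138.0):
    print(f"{aperture:.0f} mm aperture: {rayleigh_limit_arcsec(aperture):.2f} arcsec")
```

For a 138 mm aperture the result is very close to 1″, which is why that aperture makes a convenient round-number example.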

We can assume with good accuracy that these factors add in quadrature, so the total is the square root of the sum of their squares. For example, for a setup with 138 mm aperture (diffraction limit about 1″), an EQ6 mount (tracking about 1″) and 3″ average seeing we will get:

$(1^2 + 1^2 + 3^2)^{1/2} \approx 3.3$ arc seconds

So in this example we may notice that seeing is the most limiting factor. For my current setup this equation works quite well. Last night during focusing I reached a star FWHM of 2.2″ (optics + seeing), and for long exposed frames the FWHM was 2.6″ on average (optics + seeing + tracking). Guiding RMS was 1.1″, so the total setup resolution should be about 2.46″, which is a little less than the measured 2.6″. But you need to remember that during a long exposure the seeing is not constant.
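The quadrature sum is easy to reproduce. The Python sketch below (the function name is mine) recomputes both figures from this section: the 138 mm example and the estimate for my current setup.

```python
import math

def combined_blur_arcsec(*components_arcsec):
    """Combine independent blur contributions as the root of the sum of squares."""
    return math.sqrt(sum(c * c for c in components_arcsec))

# 138 mm aperture (about 1"), EQ6 tracking (about 1"), average seeing (3")
example = combined_blur_arcsec(1.0, 1.0, 3.0)

# Measured 2.2" FWHM (optics + seeing) combined with 1.1" guiding RMS
expected_long_exposure = combined_blur_arcsec(2.2, 1.1)

print(f"Example setup:          {example:.1f} arcsec")
print(f"Expected long exposure: {expected_long_exposure:.2f} arcsec")
```

Note how little the two 1″ terms add to the 3″ seeing term: the largest component dominates the sum.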

So, some preliminary conclusions:

- even top quality small aperture telescopes (60-80 mm, with the diffraction limit at the 1.8-2.3″ level) will be a resolution limiting factor (next to seeing). On one hand these kinds of telescopes are not so sensitive to seeing fluctuations. On the other hand, during good seeing they will not be able to take advantage of the stable atmosphere

- tracking of a well adjusted EQ6 mount is quite good, and it will not be a limiting factor when compared to average seeing. At premium locations where seeing reaches 1″ quite often it is definitely worth having a better mount.

Camera sampling (over and under)

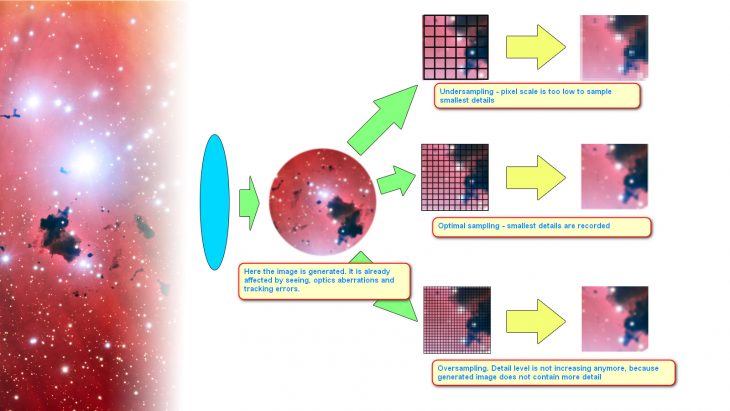

And then at the end of the optical train there is the camera sensor, which needs to capture the image generated by the optics. The image at this point is already affected by seeing, optics and tracking errors. The only task left for the sensor is to sample the image and transform it into digital form. Can anything go wrong here? We can sample the image with too low or too high a resolution. The Nyquist condition defines the optimal sampling frequency. It says that we need to sample a signal at a frequency (resolution) at least two times higher than the highest frequency (resolution) present in the signal.

Example 1 – 130mm telescope

Let’s take for example my previous imaging setup: 130 mm aperture, 740 mm focal length, EQ6 mount. When seeing is good I can get a 2.5″ star FWHM in a long exposed image. When seeing is bad, the final FWHM can be closer to 4″, but we need to calculate sampling for the best possible conditions. The pixel scale of my setup (Atik383 camera) is 1.5″/px, so the sampling rate is 2.5/1.5 = 1.7. That is a little less than the optimal 2.0, so the image is a little undersampled. But very little. When an image is more undersampled we can improve the stacked picture using the drizzle algorithm. It is available in several software packages (for example MaxIm DL or PixInsight).

Example 2 – 65mm telescope

Another example is the popular TS65/420 refractor working on an HEQ5 mount. When we attach a full frame camera with the 11000 sensor, we get a nice wide field imaging setup. The final resolution will contain optics (2.3″), tracking (1″) and seeing (3″), and these sum up to a value of about 4″. The 11000 sensor has 9 um pixels, which gives us a pixel scale of 4.4″/px. Sampling here is at the 0.9 level, and the final stacked image quality can definitely be improved by using drizzle.
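Both examples can be recomputed with a short Python sketch. The function names are my own; the sampling ratio is simply the star FWHM divided by the pixel scale, compared against the optimal value of 2.0 used in this article.

```python
import math

def pixel_scale_arcsec(pixel_size_um, focal_length_mm):
    """Pixel scale in arc seconds per pixel."""
    return 206.3 * pixel_size_um / focal_length_mm

def sampling_ratio(fwhm_arcsec, scale_arcsec_per_px):
    """Number of pixels across the star FWHM."""
    return fwhm_arcsec / scale_arcsec_per_px

# Example 1: 2.5" FWHM at the 1.5"/px scale quoted for the 130 mm setup
ratio_130 = sampling_ratio(2.5, 1.5)

# Example 2: optics 2.3", tracking 1", seeing 3", 9 um pixels at 420 mm
fwhm_65 = math.sqrt(2.3**2 + 1.0**2 + 3.0**2)
scale_65 = pixel_scale_arcsec(9.0, 420.0)
ratio_65 = sampling_ratio(fwhm_65, scale_65)

print(f"130 mm setup: sampling {ratio_130:.1f}")
print(f"65 mm setup:  FWHM {fwhm_65:.1f} arcsec, scale {scale_65:.1f} arcsec/px, "
      f"sampling {ratio_65:.1f}")
```

A ratio below 2.0 means the image is undersampled, and the further below, the more a drizzled stack has to gain.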

And what about the opposite scenario, when the image is oversampled? This is the case for example with the ASI1600 CMOS camera and an SCT8″ telescope, where the pixel scale is about 0.4″/px and in average seeing conditions we will get sampling at the 7.5 level, which is heavily oversampled. For oversampled images the following things happen:

- the detail level does not increase anymore. Sampling is not the limiting factor here

- the field of view is smaller. This is usually not welcome

- to some extent SNR is lowered, depending on camera read noise. It is not a big problem for cameras with low read noise, like CMOS cameras

That’s why neither oversampling nor undersampling is beneficial. We can improve the quality of undersampled images when we stack them using drizzle. For oversampled images we can use focal reducers or binning. One thing worth remembering is that pixel scale and image resolution are totally independent qualities. The image is generated by the optics and is also affected by tracking errors and seeing, and usually these factors determine image resolution.
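To see what binning or a focal reducer does to an oversampled setup, here is a small Python sketch based on the ASI1600 and SCT8″ case above. The helper names are mine, and the 3″ FWHM is an assumption inferred from the quoted figures (7.5 × 0.4″/px), not a measured value.

```python
def effective_pixel_scale(scale_arcsec_per_px, binning=1, reducer=1.0):
    """Pixel scale after binning (bigger pixels) and/or a focal reducer (shorter focal length)."""
    return scale_arcsec_per_px * binning / reducer

def sampling_ratio(fwhm_arcsec, scale_arcsec_per_px):
    """Number of pixels across the star FWHM."""
    return fwhm_arcsec / scale_arcsec_per_px

native_scale = 0.4   # arcsec/px, as quoted for ASI1600 + SCT8"
fwhm = 3.0           # arcsec, assumed from 7.5 * 0.4

options = {
    "native": effective_pixel_scale(native_scale),
    "bin x2": effective_pixel_scale(native_scale, binning=2),
    "0.5x reducer": effective_pixel_scale(native_scale, reducer=0.5),
    "bin x4": effective_pixel_scale(native_scale, binning=4),
}
for name, scale in options.items():
    print(f"{name:>12}: {scale:.1f} arcsec/px, sampling {sampling_ratio(fwhm, scale):.2f}")
```

Bin x2 and a 0.5x reducer give the same pixel scale, in line with the earlier sections on the focal reducer and binning effects, and under this assumed seeing the setup only approaches the optimal value of 2.0 at bin x4.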

But this is only theory that maybe someone would like to know. In real life the number of available sensor types is limited. I would estimate that 80% of the amateur astroimaging market is covered by maybe a dozen sensor models. One thing you need to know is that there is no universal setup. Also, when we make pretty pictures, resolution is not the most important aspect. More important is the whole picture, and nobody will examine it with a magnifying glass trying to split tight doubles. Most imaging setups for aesthetic photography are undersampled (to achieve a large field of view), so drizzle can help here. But when you do something other than this kind of astrophotography (like astrometry or photometry), you can try to achieve the optimal pixel scale for your imaging setup.

Some conclusions

- the factors responsible for the resolution of the image generated at the sensor surface are: seeing, aperture and optics quality, and mount tracking. But…

- image resolution can also be limited by a coarse pixel scale (many arc seconds per pixel, as with a camera with large pixels). This scenario is not advisable.

- the image generated at the sensor surface is then sampled by the sensor pixels. Optimal sampling is about 2 pixels per resolution element (star FWHM)

- oversampling does not increase image detail, reduces the field of view, and adds some additional (but small) amount of noise

- undersampling decreases the image detail level

Clear skies!

4 Comments

Hello again from Montreal,

What a great article. Thanks to your methodical approach and using real examples, now I get it.

Your conclusion sums it up in principle, but your real life examples and simple calculations did it for me.

Respectfully,

Henri-Julien

Truly fantastic article! Thank you!

Here’s how I understand the structure and what I will need to learn about these concepts and formulae:

These concepts and formulae are composed of:

1. Foundational units, like:

– Focal length,

– Objective diameter

– Pixel size

2. Compound units, like:

– *F-ratio:

–> focal length / diameter

– Pixel scale (” of sky/pixel):

–> 206.3 * pixel size um / focal length mm

3. Multi-compound units built on two or more compound building-block units, like:

— Oversampling

— Undersampling

For me to truly understand, I have to:

1. Create a hierarchy chart, with definitions of every base, compound and multi-compound unit.

2. Learn the meanings of the base units and use this to memorize which of them go into each compound unit.

3. When I’ve really learned #2, then I’ll learn the multi-compound concepts.

I’m fortunate. As in the first example, my telescope is a 130mm diameter refractor, an A-P EDT130. Its F/L is 1085mm, F/8.35.

With the A-P .75 focal reducer, it’s reduced to F6.25 or 814mm FL. This requires only a small adjustment from the 740mm FL telescope used in the example.

My camera is an Atik 490EX with 3.69 um pixels vs the Atik 383 with 5.4 um pixels, but it’s easy for me to adjust as it’s just one value.

The best compliment I can pay the author is that, out of the *many* articles I’ve read on this subject, his is the one I will be re-reading and referring to, again and again many times, over the coming years.

Thank you again for this great article!

Michael Milligan

Layton, Utah

Great summary!

For me the important takeaway is to see the system as a whole with all factors considered, camera, optics, mount, seeing. A lot of forum posts and articles overfocus on camera vs scope theory and forget seeing and tracking.

I am getting more serious about astrophotography and am in the market to buy a camera and wide-field APO refractor (80mm class). Typical of many people with engineering background, I have been over-analyzing the camera/telescope selection process! Your article is fantastic and has put my mind at ease over what gear to buy. The real-world impact of optical limitations and seeing limitations on image quality and required pixel scale are key and what many people fail to consider. Thank you for a great article and I will be using this as my “go-to” reference for this topic.